A combination of “deep learning” and “fake”, the term deepfakes denotes audiovisual synthetic media produced by deep learning algorithms in which an actor is made to perform and/or speak with the actions and words of a different actor. While the algorithms which are making this possible are not particularly new, certain applications have warranted our undivided attention, especially in the context of disinformation.

Learning to recognize deepfakes can protect individuals’ privacy as well as a company’s reputation and financial stability. It is therefore important for businesses to understand what deepfakes are and the risks they carry. In this article, we’ll take a look at the AI behind deepfakes and share some tips on how to recognize them.

Deepfakes in the digital world

Much like fake news, deepfakes can pollute the digital space with inflammatory discourse and false information. Video content is much more readily absorbed as fact, particularly at this stage, when knowledge and understanding of deepfakes are not widespread. The strain of constantly trying to differentiate between real and fake information, regardless of the format, can become a catalyst for a phenomenon called “reality apathy”: an indifference and numbness toward both truth and lies. The implications of an apathetic society alone are enough to justify devoting resources to combating this information apocalypse. For years now, academics have been researching how to spot fake news, flag propagandistic content, and automate fact-checking. The problem of deepfakes, however, requires even more complex and involved solutions, and deepfake creators are swiftly catching up with each new one.

First Look at Deepfakes

Deepfakes made their first notable appearance in late 2017 through a Reddit post featuring explicit fabricated content of famous actresses. Although the post was taken down, these non-consensual images quickly went viral and spread across many online spaces. That same year, the University of Washington released a deepfake of former U.S. president Barack Obama in an attempt to warn about the potential dangers of this technology. Both events demonstrate the technology’s potential for harm. In 2018, filmmaker Jordan Peele used a deepfake of Obama to warn, “We’re entering an era in which our enemies can make anyone say anything at any point in time.” Unfortunately, in the years since, political influence and involuntary pornography have remained the two most prevalent harmful uses of this technology, with financial fraud next on the list.

The Good about Deepfakes

There is another side to deepfakes, however. As the algorithms behind them are open source and available online, amateurs have embraced them as a new means of entertainment. In the deepfake meme library, you will commonly find crossovers between film/TV and actors, such as Jim Carrey as Jack in “The Shining” or Snoop Dogg doing tarot card readings. Large industries such as film, education, and healthcare, as well as business fields like fashion and e-commerce, are actively exploring what deepfake technology can do for them. On the surrealist artist’s 115th birthday, the Dalí Museum presented its visitors with an engaging, life-like Salvador Dalí. The project, called “Dalí Lives”, was created in partnership with the advertising agency Goodby Silverstein & Partners and claimed numerous awards for creativity and innovation. Another example is VocaliD, an AI voice company that provides millions of people who rely on synthetic speech with a unique voice.

In 2019, the non-profit organization Malaria No More collaborated with video creation platform Synthesia to “teach” David Beckham to speak 9 languages in a global awareness campaign about the deadly disease. Then, in his 2022 “The Heart Part 5” video, artist Kendrick Lamar seamlessly transforms into Kobe Bryant, Kanye West, and Will Smith among others. The video is an exemplary testament to the capabilities of deepfake technology and was met with critical acclaim.

The AI behind Deepfakes

Deepfakes are created by generative neural networks, most often autoencoders or generative adversarial networks (GANs). The relevant algorithms can be divided into two categories depending on the goals of face manipulation – face swapping and face reenactment. Face reenactment aims to manipulate facial attributes such as expression, pose, or gaze of a video or a single image, whereas face swap tries to seamlessly replace a face from a source image with a target face while maintaining the realism of the facial appearance.

Face-swapping

Face-swapping algorithms are mostly based on autoencoders. An autoencoder is a network that consists of an encoder and a decoder component. The lower-dimensional space between them is called the latent space. The encoder first extracts latent features from the image, and the decoder then uses them to reconstruct the original image. For face swapping, two encoders with the same weights (in effect, a single shared encoder) are trained to extract features from the source and target faces, and the extracted features are fed to two separate decoders to reconstruct the faces. More specifically, autoencoder A (the shared encoder plus decoder A) is trained only with the faces of A, while autoencoder B (the shared encoder plus decoder B) is trained only with the faces of B. When training is complete, the latent representation that the encoder generates from a face of A is passed to decoder B. If the model is any good, these latent features represent attributes such as facial expression rather than identity, so the reconstructed face looks like B while mimicking the expression of A. In summary, face swapping uses a source and a target video to “map” faces between the frames of the two videos.
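The data flow described above can be sketched in a few lines of Python. This is only a toy illustration, not a real deepfake model: the three-number "faces", the constants `BASE_A` and `BASE_B`, and all function names are made up for this example, and the encoder and decoder weights are set by hand instead of being learned. Real systems train deep convolutional networks on many images of each person.

```python
# Toy illustration of the shared-encoder / two-decoder data flow.
# ASSUMPTION: a "face" is just three numbers:
#   [identity feature 1, identity feature 2, expression].
# Real models learn these mappings; here they are set by hand.

BASE_A = [1.0, 0.0, 0.0]  # neutral face of person A (made-up numbers)
BASE_B = [0.0, 1.0, 0.0]  # neutral face of person B (made-up numbers)


def make_face(base, expression):
    """Build a toy face: the person's neutral face plus an expression value."""
    return [base[0], base[1], base[2] + expression]


def encode(face):
    """Shared encoder: keep only the expression coordinate as the latent code."""
    return face[2]


def make_decoder(base):
    """Identity-specific decoder: rebuild a face of one person from a latent code."""
    def decode(latent):
        return [base[0], base[1], base[2] + latent]
    return decode


decode_a = make_decoder(BASE_A)
decode_b = make_decoder(BASE_B)


def swap_a_to_b(face_a):
    """Face swap: encode a face of A, then decode it with B's decoder."""
    return decode_b(encode(face_a))


smiling_a = make_face(BASE_A, 0.5)
print(decode_a(encode(smiling_a)))  # [1.0, 0.0, 0.5]: A reconstructed as A
print(swap_a_to_b(smiling_a))       # [0.0, 1.0, 0.5]: B's identity, A's expression
```

The point of the sketch is the routing: the same encoder serves both people, and the choice of decoder alone decides whose face comes out.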

Face reenactment

Face reenactment employs monocular face reconstruction to obtain a low-dimensional parameter representation of the source and target videos. Monocular face reconstruction is a computer vision task that aims to recover 3D face geometry from a single RGB face image. To perform face reenactment, the scene illumination and identity parameters are preserved, while the head pose and expression are changed. Afterward, synthetic images of the target actor are regenerated based on the modified parameters. Finally, a rendering-to-video translation network is applied to generate realistic videos from these images with a high degree of spatiotemporal continuity.
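The parameter exchange at the core of this pipeline can be sketched as follows. This is a minimal illustration under stated assumptions: the `FaceParams` class, its field names, and the example values are hypothetical, and the reconstruction and rendering networks that produce and consume such parameters are not shown.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FaceParams:
    """Hypothetical per-frame parameters from monocular face reconstruction."""
    identity: tuple      # face shape and appearance coefficients
    illumination: tuple  # scene lighting coefficients
    head_pose: tuple     # for example (yaw, pitch, roll)
    expression: tuple    # expression coefficients


def reenact(source: FaceParams, target: FaceParams) -> FaceParams:
    """Keep the target's identity and illumination, take pose and expression from the source."""
    return replace(target, head_pose=source.head_pose, expression=source.expression)


# Made-up example values for one frame of each video.
source_frame = FaceParams(identity=(0.1, 0.2), illumination=(0.9,),
                          head_pose=(10.0, 0.0, 0.0), expression=(0.7, 0.3))
target_frame = FaceParams(identity=(0.5, 0.6), illumination=(0.4,),
                          head_pose=(0.0, 5.0, 0.0), expression=(0.0, 0.0))

modified = reenact(source_frame, target_frame)
print(modified)
```

The modified parameters would then be rendered into synthetic images of the target actor, which the translation network turns into video frames.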

Current deepfake detection methods generally rely on artificial neural networks and fundamental image features. These methods can be categorized into five groups. Methods based on general networks, typically a convolutional neural network (CNN), perform frame-level classification. Methods based on temporal consistency look for inconsistencies between adjacent frames by employing recurrent neural networks (RNNs). Methods centered on visual artifacts use CNNs to identify intrinsic image discrepancies in the blending boundaries caused by the deepfake generation process. Methods based on camera fingerprints look for differences between faces and backgrounds in the traces left behind by the capturing devices. Methods focusing on biological signals exploit the fact that hidden biological signals of the human face (blinking, skin color changes, etc.) are hard for deepfake generation networks to model and therefore to replicate. A recurring pattern in deepfake detection research is that once a deficiency of forged imagery is discovered and detailed in publications, the next deepfake algorithm overcomes it. This type of evolution calls for focusing research on methods that do not depend on a specific generation algorithm and that target flaws which are not trivial to repair.
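To make the first two groups more concrete, here is a minimal sketch of how per-frame predictions might be combined. It assumes a hypothetical frame-level classifier has already produced a fake probability for every frame; the function names, the averaging rule, and the threshold are illustrative choices. In particular, the RNN-based methods mentioned above learn temporal inconsistencies from data rather than applying a fixed threshold like this.

```python
def video_fake_score(frame_probs):
    """Frame-level classification: average the per-frame fake probabilities."""
    if not frame_probs:
        raise ValueError("need at least one frame")
    return sum(frame_probs) / len(frame_probs)


def temporal_jumps(frame_probs, threshold=0.5):
    """Temporal consistency: indices i where the score jumps between frame i and i + 1."""
    return [
        i
        for i in range(len(frame_probs) - 1)
        if abs(frame_probs[i + 1] - frame_probs[i]) > threshold
    ]


# Made-up scores from a hypothetical frame-level classifier.
scores = [0.125, 0.25, 0.875, 0.75]
print(video_fake_score(scores))  # 0.5
print(temporal_jumps(scores))    # [1]: a sharp change between frames 1 and 2
```

A sudden jump between neighbouring frames is suspicious because a genuine recording rarely changes its character from one frame to the next.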

While We Wait for Automated Detection…

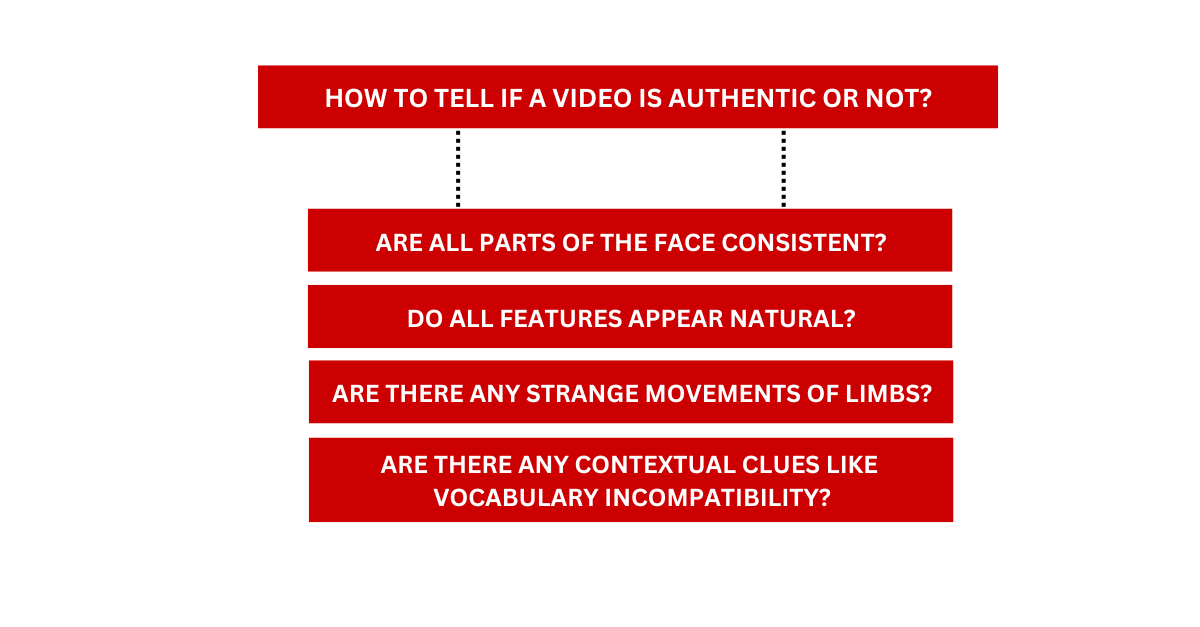

While deepfake detection algorithms are not yet omnipresent and ready to alert us in time, it’s worth discussing practical tips to keep in mind when trying to ascertain whether a video is authentic.

First and foremost, look at the face! Most, if not all, of the tell-tale signs of tampering will be on or near the face and head. Is the person blinking too little or too much? Are parts of the face (eyes, mouth, forehead, nose) consistent with each other in terms of age, color, etc.? Do features like facial hair, moles, or scars appear natural? Shimmering, distorted, or blurred lines of the face are also good indicators of falsification. Look out for strange movements of limbs or even of fixed objects in the background. Lighting and shadows can defy the laws of physics in manipulated content. Contextual clues, such as vocabulary and speech patterns incompatible with the impersonated figure, are equally important.
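The blinking tip is one of the few checks above that is easy to express in code. The sketch below assumes that some face-tracking tool (not shown, and hypothetical here) already provides an eye-openness value for every frame, where low values mean the eyes are closed. The threshold of 0.2 is an arbitrary illustrative choice, and the resulting rate should be read as a hint for closer inspection, not as proof of manipulation.

```python
def count_blinks(eye_openness, closed_below=0.2):
    """Count transitions from open eyes to closed eyes in a per-frame series."""
    if not eye_openness:
        return 0
    blinks = 0
    was_closed = eye_openness[0] < closed_below
    for value in eye_openness[1:]:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # the eyes have just closed: one new blink
        was_closed = is_closed
    return blinks


def blinks_per_minute(eye_openness, fps, closed_below=0.2):
    """Convert the blink count into a rate, given the video frame rate."""
    if fps <= 0 or not eye_openness:
        raise ValueError("need a positive frame rate and at least one frame")
    duration_seconds = len(eye_openness) / fps
    return count_blinks(eye_openness, closed_below) * 60 / duration_seconds


# Made-up two-second clip at 30 fps containing a single blink.
clip = [0.3] * 20 + [0.1] * 5 + [0.3] * 35
print(count_blinks(clip))           # 1
print(blinks_per_minute(clip, 30))  # 30.0
```

A clip in which the measured rate is close to zero, or implausibly high, would be a candidate for the closer visual checks listed above.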

While we stay vigilant, it’s also imperative for government authorities, businesses, educators, and journalists to inform and be informed about this type of threat. Prohibiting the fraudulent use of such technologies for commercial, political, or anti-social purposes should be an objective, as should establishing general regulation.

Accedia Cyber Security Practices and Fighting Deepfakes

Accedia specializes in providing highly skilled Cyber Security Consultants to combat the escalating threats posed by deepfakes. Our services encompass Security Vulnerability Assessments, aimed at fortifying client applications and adhering to industry standards to ensure the provision of dependable Cyber Security services. Our commitment to mitigating the negative impacts of deepfakes by leveraging our technical expertise is a hallmark of our work.

In Conclusion

Deepfake technology has both positive and negative implications for society. While it has the potential to revolutionize entertainment and communication, it also poses a significant threat to individuals’ privacy, security, and reputation. As deepfake technology continues to evolve, it is essential to take proactive measures to mitigate the risks and potential harm it could cause. This includes increasing awareness and education about deepfake technology, promoting the responsible use of these tools, and implementing appropriate regulations and laws to prevent misuse. Only through a collaborative effort can we ensure that deepfake technology is used ethically and for the betterment of society.

If you have any further questions regarding deepfakes and AI in general, feel free to contact us!